News

2019-05-31 Initial publication of the Wisdome contest webpage

2019-10-01 Updated information on the call for participation and technical information

2020-02-10 Added submission information and the link to the Precision conference system. Updated the deadlines.

2020-04-14 Added information on COVID-19 and moving the Wisdome contest to Eurographics 2021

UPDATE: COVID-19 and Wisdome Contest 2020

Following EGEV2020 it has been decided not to host the Wisdome Contest in Norrköping this year. Instead the contest will most likely be held in conjunction with Eurographics 2021 in Vienna.

Further details will be provided over the coming days as we work them out. We look forward to your participation next year.

In conjunction with the joint conferences Eurographics & EuroVis in Norrköping, Sweden in 2020, we invite you to join the co-located Wisdome Contest. The goal of the Wisdome contest is to be a festival of scientific and/or data-inspired dome movies for a public audience. The Visualization Center C in Norrköping houses one of the premier dome theatres in Europe and is operated in close cooperation with Linköping University, which makes it a well-suited venue for communicating scientific content to a wide audience using this display method.

We encourage submissions from a wide array of backgrounds, ranging from academic institutions to digital science centers and beyond. Movie submissions should aim to educate about science in general, a particular scientific aspect or discovery, or the scientific method. They should be aimed predominantly at the educated general public that might visit a science center and wishes to learn more about the world around us. Submissions will be assessed by the referees according to the following criteria:

- creative use of the dome surface, sound and music,

- the visual quality,

- creativity,

- the story telling quality,

- public engagement, and

- technical excellence.

All accepted submissions are invited to be included in a “showreel” of the contest that will be distributed to several planetaria and science centers around the world, which will further increase the visibility of the submissions.

Call for Submissions

Submissions consist of a fulldome movie and an accompanying document of up to 2 pages in length, with an optional third page for references. This document must use the two-column style used at the EuroVis conference, and should include short paragraphs addressing the following aspects:

- description of the submission category and targeted audience, for example: explanatory, exploratory, artistic, or commercial,

- short explanations of how the submission addresses the evaluation criteria,

- short description of the data that has been used,

- short description of the rendering and visualization techniques used,

- summary of your story

The pre-rendered fulldome movie should be 3-10 minutes long and be submitted as a monoscopic 180° dome master in either 4096×4096 or 8192×8192 resolution using either the H.264 or H.265 encoding with embedded audio, if desired (see technical information below for more details). Audio formats of stereo, 5.1, or 7.1 are recommended.

Assessment Criteria

Submissions will be assessed by the referees according to the following criteria:

- Use of the dome surface; How is the learner's experience enhanced by the use of a planetarium dome surface compared to watching the same content on a flat screen display? Aspects of this criterion are the increase in available display surface area, the ability to create a shared virtual reality space between all participants, and the ability to present a different viewpoint to different learners.

- Use of sound and music; How are sounds and music used in the narration to guide, explain, and engage the audience? And how do technical aspects such as sound quality, mixing, and the use of directional sound and music change the experience and influence the consumption of the movie?

- Visual quality; This criterion captures the overall quality of the visuals. Please note that this does not include, for example, the use of special effects that have no impact on the story telling, but rather captures the overall theme of the submission.

- Creativity and Story telling; The overall novelty and creativity of the submission. Does the submission present a unique viewpoint on the scientific subject and does it use this viewpoint to explain the concept in a novel way? How is the flow of the narration designed: is it easy to follow for a layperson, or is it easy to “get lost” in the explanations?

- Engagement; This judges the level of engagement of the viewer. How likely are visitors to continue with their own research on the scientific topic after watching the submission? Are there open questions that might provide an interesting point of self-study for the learner? How engaged are they while watching the submission?

- Technical excellence; How is the overall production quality of the submission? Are there issues with the frame rate of the movie? Are there smooth transitions between scenes and fluid camera movements that aid in watching the material?

Submission Guidelines

Submissions to the Wisdome Movie Contest are made through the Precision conference system (https://new.precisionconference.com/vgtc). The contest track is named “EuroVis 2020 Wisdome Movie Contest” and is located under the “VGTC” society and the “EuroVis 2020” Conference/Journal grouping.

For the abstract deadline on 2020-03-06, submissions require the following:

- Title

- Complete author list with all affiliations; for people with multiple affiliations, enter each affiliation on a separate line

- Abstract; aim for a 100-word description of the content of the submission, its topic, and the targeted audience

For the full deadline on 2020-03-13, submissions require all of the above plus the following:

- PDF document describing the submission in greater detail. This document should include descriptions about the techniques that are used in the video submission, more information about the audience, explanations about the dataset and rendering methods, and more

- A link to the video hosted on a service of your choice. Please ensure that the video remains accessible throughout the entire process until the event takes place on 2020-05-25.

Important Dates

All submission dates are 23:59 UTC. The link to the submission system will be posted on this location at a later stage.

| Date | Event |
|---|---|
| 2020-03-06 | Abstract Submission Deadline |
| 2020-03-13 | Paper and Movie Submission Deadline |
| 2020-04-13 | Notification |
| 2020-05-25 | Wisdome Contest Date |

Technical Details

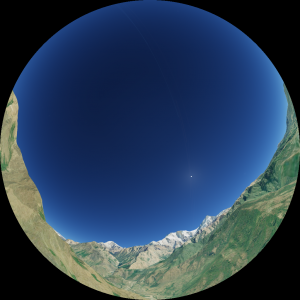

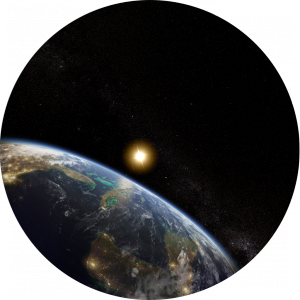

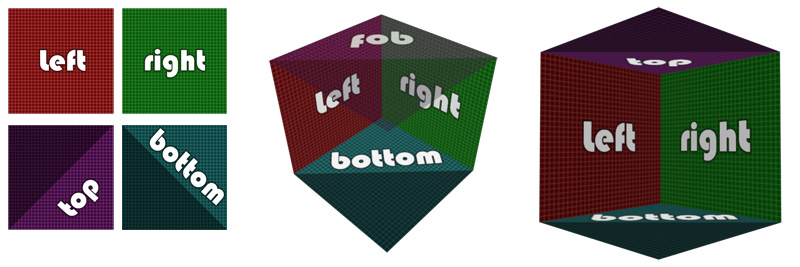

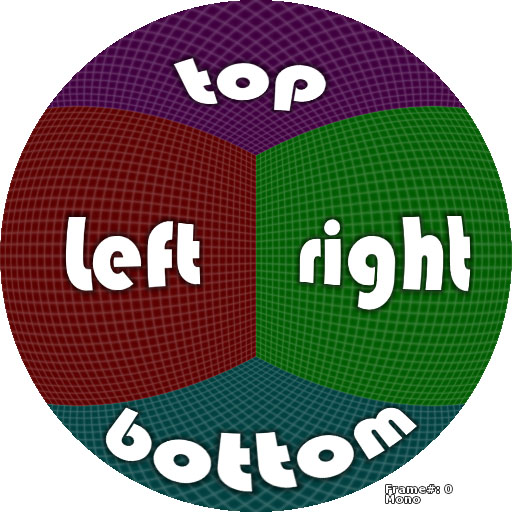

This section contains technical information for preparing the movie submission. As the target displays for all submissions are dome theatres and planetarium domes, videos have to be prepared in a 180-degree angular fisheye projection (http://paulbourke.net/dome/fisheye/). The following two images show examples of this rendering technique.

Submissions to this contest have to use this projection technique with a resolution of either 4096×4096 or 8192×8192. The individual frames should be combined into an MP4 file using either H.265/HEVC or H.264/AVC encoding. Optional audio tracks shall be submitted as separate files and also be included in this MP4 file. Submissions are free to use either 2.1, 5.1, or 7.1 audio, which means the submission consists of an MP4 video file and either 3, 6, or 8 individual audio files, one per channel.
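The requirements above can be checked before uploading. The following is a minimal sketch of such a check; the function names and the codec identifiers `h264` and `hevc` are assumptions made for illustration and are not part of any official contest tooling. The duration limits are taken from the call for submissions (3-10 minutes).

```python
# Hypothetical pre-submission check; names and codec identifiers are illustrative.
ALLOWED_RESOLUTIONS = {(4096, 4096), (8192, 8192)}
ALLOWED_CODECS = {"h264", "hevc"}  # H.264/AVC and H.265/HEVC
AUDIO_CHANNELS = {"2.1": 3, "5.1": 6, "7.1": 8}  # one audio file per channel


def check_submission(width, height, codec, duration_s, audio_layout=None):
    """Return a list of problems; an empty list means the basic checks pass."""
    problems = []
    if (width, height) not in ALLOWED_RESOLUTIONS:
        problems.append(f"resolution {width}x{height} is not 4096x4096 or 8192x8192")
    if codec not in ALLOWED_CODECS:
        problems.append(f"codec {codec!r} is not H.264 or H.265")
    if not 3 * 60 <= duration_s <= 10 * 60:
        problems.append(f"duration of {duration_s} s is outside 3-10 minutes")
    if audio_layout is not None and audio_layout not in AUDIO_CHANNELS:
        problems.append(f"audio layout {audio_layout!r} is not 2.1, 5.1, or 7.1")
    return problems


def expected_audio_files(audio_layout):
    """Number of separate per-channel audio files for a given layout."""
    return AUDIO_CHANNELS[audio_layout]
```

For example, `check_submission(4096, 4096, "hevc", 300, "5.1")` returns an empty list, and `expected_audio_files("5.1")` gives the 6 separate channel files mentioned above.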

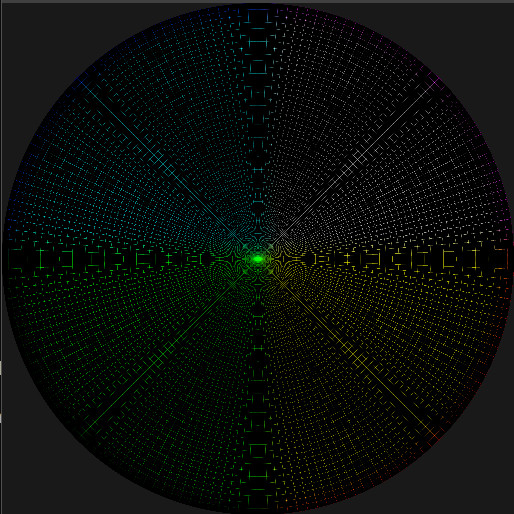

The remainder of this section is split into three parts, which follow the production pipeline for creating the pre-rendered visual components. Additional information can be found on the accompanying GitLab page (https://gitlab.liu.se/wisdome-contest-2020) and a tutorial page for an in-house developed fisheye stitching tool (http://sgct.itn.liu.se/tutorial/offline).

Fisheye Image Rendering

If the software you are using to create the content for the contest has the ability to generate fisheye images directly, feel free to skip this part of the instructions.

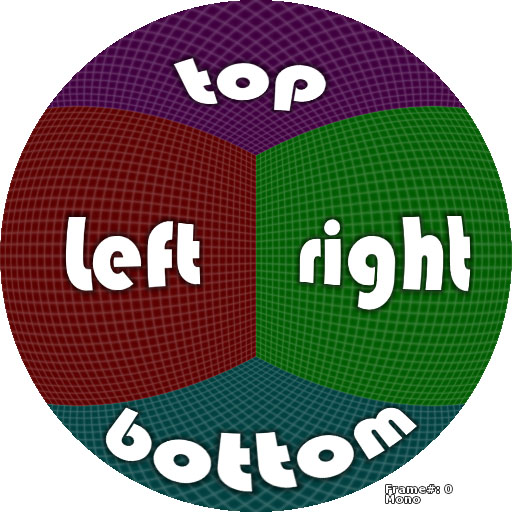

At a high level, rendering a fisheye image consists of rendering from 4 separate orthogonal viewpoints. These viewpoints then get merged into a cubemap, which in a second step gets reprojected into the fisheye representation. These two steps are already implemented in the Stitcher application, which is provided below. The following images show these 4 orthogonal viewpoints as “left”, “right”, “top”, “bottom”. Please note that in the case of this submission (180° fisheye), only half of the “top” and “bottom” are used in the final result.
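To illustrate the reprojection step, the sketch below maps a pixel of an angular fisheye image to a viewing direction and then picks the cube face that direction falls on. It is not the Stitcher's implementation: the axis-aligned face names (`+x`, `-y`, `+z`, ...) and the choice of `+z` as the dome's central axis are assumptions for illustration, and they differ from the “left”, “right”, “top”, “bottom” layout described above.

```python
import math


def fisheye_pixel_to_direction(px, py, size, aperture_deg=180.0):
    """Map pixel (px, py) of a size x size angular fisheye image to a unit
    direction, or None if the pixel lies outside the fisheye circle."""
    # Pixel centre in normalized coordinates, each in [-1, 1].
    u = 2.0 * (px + 0.5) / size - 1.0
    v = 2.0 * (py + 0.5) / size - 1.0
    r = math.hypot(u, v)
    if r > 1.0:
        return None
    # Angular fisheye: the angle from the central axis grows linearly with r.
    theta = r * math.radians(aperture_deg) / 2.0
    phi = math.atan2(v, u)
    return (
        math.sin(theta) * math.cos(phi),
        math.sin(theta) * math.sin(phi),
        math.cos(theta),
    )


def dominant_cube_face(direction):
    """Return the axis-aligned cube face hit by the direction (assumed naming)."""
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if az >= ax and az >= ay:
        return "+z" if z >= 0 else "-z"
    if ax >= ay:
        return "+x" if x >= 0 else "-x"
    return "+y" if y >= 0 else "-y"
```

A full reprojection would run this for every output pixel and sample the matching cube face image at the corresponding position; the sketch only covers the geometric lookup.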

Whichever software you are using for creating the submission content has to be able to render to a square field-of-view and be able to output those renderings to individual files, such that in the end you have four image sequences of the form:

basefilename_top_00000.png to basefilename_top_36000.png

basefilename_bottom_00000.png to basefilename_bottom_36000.png

basefilename_left_00000.png to basefilename_left_36000.png

basefilename_right_00000.png to basefilename_right_36000.png

This amounts to 36000 frames for a 10-minute video at 60 fps (10 [min] * 60 [sec/min] * 60 [frames/sec]).
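The same arithmetic and the naming scheme can be expressed as a short sketch. The helper names are hypothetical; only the file name pattern and the 5-digit zero padding are taken from the examples above.

```python
def frame_count(duration_min, fps):
    """Number of frames for a video of the given length and frame rate."""
    return round(duration_min * 60 * fps)


def frame_filename(base, face, index):
    """File name for one rendered cube face, using 5-digit zero padding."""
    return f"{base}_{face}_{index:05d}.png"


# 10 minutes at 60 fps.
total = frame_count(10, 60)
first = frame_filename("basefilename", "top", 0)
```

Here `total` is 36000 and `first` is `basefilename_top_00000.png`. Note that if numbering starts at 00000, a sequence of N frames occupies the indices 0 to N-1.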

The Stitcher application then converts these into 180° fisheye images.

These files are of the form:

basefilename_00000.png to basefilename_36000.png

The Stitcher application to transform cubemap images into fisheye projection is available from (http://sgct.itn.liu.se/uploads/stitcher.zip). The application is only provided as a pre-built binary for Windows, but is available as source code on GitHub (https://github.com/opensgct/sgct). The commandline version of the application should be compilable for both macOS and Linux; the UI is built in C# and probably requires Mono, but is very much untested on non-Windows platforms.

Video Creation

The easiest way to convert an image sequence of the form basefilename_00000.png to basefilename_36000.png into a video is to use the open-source solution FFmpeg (https://www.ffmpeg.org), which is a commandline tool that can be used to convert image sequences into a video format and add multiple audio tracks at the same time. The available options in FFmpeg are too numerous to cover here, so this guide is not meant as a comprehensive explanation (see the FFmpeg documentation (https://ffmpeg.org/ffmpeg.html) for complete information).

Generating a video from an image sequence

ffmpeg -framerate 60 -i basefilename_%05d.png -i audio.mp3 -c:v libx265 -c:a copy basefilename.mp4

Explanation:

ffmpeg: Name of the commandline tool installed from ffmpeg.org

-framerate 60: Uses 60 of the provided images per second of video runtime

-i basefilename_%05d.png: Search for files basefilename_00000.png to basefilename_99999.png as frame input for the visual part

-i audio.mp3: Use the file audio.mp3 as the input for the sound part of the video

-c:v libx265: Use the H.265 video encoding format (alternatively: use libx264 if the size of images is 4096×4096)

-c:a copy: Do not reencode the audio stream but copy instead

basefilename.mp4: The output file that is to be submitted for the contest
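When many sequences have to be encoded, the command can be assembled in a script. The following is a minimal sketch that builds the same argument list as the command above; the function name and its parameters are hypothetical, and only the FFmpeg options already explained in this section are used.

```python
def build_ffmpeg_command(base, audio=None, fps=60, codec="libx265"):
    """Build the FFmpeg argument list for one image sequence.

    base  -- common file name prefix of the frames and of the output file
    audio -- optional audio file whose stream is copied without re-encoding
    """
    cmd = ["ffmpeg", "-framerate", str(fps), "-i", f"{base}_%05d.png"]
    if audio is not None:
        cmd += ["-i", audio]
    cmd += ["-c:v", codec]
    if audio is not None:
        cmd += ["-c:a", "copy"]
    cmd.append(f"{base}.mp4")
    return cmd


command = build_ffmpeg_command("basefilename", audio="audio.mp3")
# To actually run the encode (requires FFmpeg to be installed):
#   import subprocess
#   subprocess.run(command, check=True)
```

Passing the arguments as a list avoids shell quoting issues with file names; joining `command` with spaces reproduces the command line shown above.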

Organization

Program chairs

- Alexander Bock, Linköping University & University of Utah

- Erik Sundén, Linköping University

Steering Committee

- Ingrid Hotz, Linköping University

- Patric Ljung, Linköping University

- Niklas Rönnberg, Linköping University

International Program Committee

- Thomas Clifford, Project Leader, Visualization Center C

- Konstantin Economou, Lecturer in Media & Communication Studies, Linköping University

- Gabriel Eilertsen, Postdoctoral Researcher, Linköping University

- Carter Emmart, Director for Astrovisualization, American Museum of Natural History

- Jackie Faherty, Senior Scientist, American Museum of Natural History

- Mats Falck, External Relations Coordinator, Umeå University

- Chuck Hansen, Professor of Scientific Visualization, University of Utah

- Ralph Heinsohn, Designer, Curator & Producer of Immersive Media, Hamburg

- Tim Florian Horn, Director of the Zeiss-Großplanetarium and Archenhold-Sternwarte

- Ralph Kaehler, Manager of the Visualization Facilities at the Kavli Institute for Particle Astrophysics and Cosmology

- Malcolm Lindsay, Composer, malcolmlindsay.com

- Konrad Polthier, Professor of Mathematical Geometry Processing, Freie Universität Berlin

- Ben Shedd, Director, Producer, Writer, and Professor at the School of Art, Design and Media, Nanyang Technological University

- Mark SubbaRao, President of the International Planetarium Society, Director of Visualization, Adler Planetarium

- Ryan Wyatt, Senior Director of Science Visualization, California Academy of Sciences